Opera Overtakes Competitors: An Artificial Intelligence Feature None of the Others Have!

Opera is launching a new privacy-focused AI feature that its competitors don't have. Here are the details!

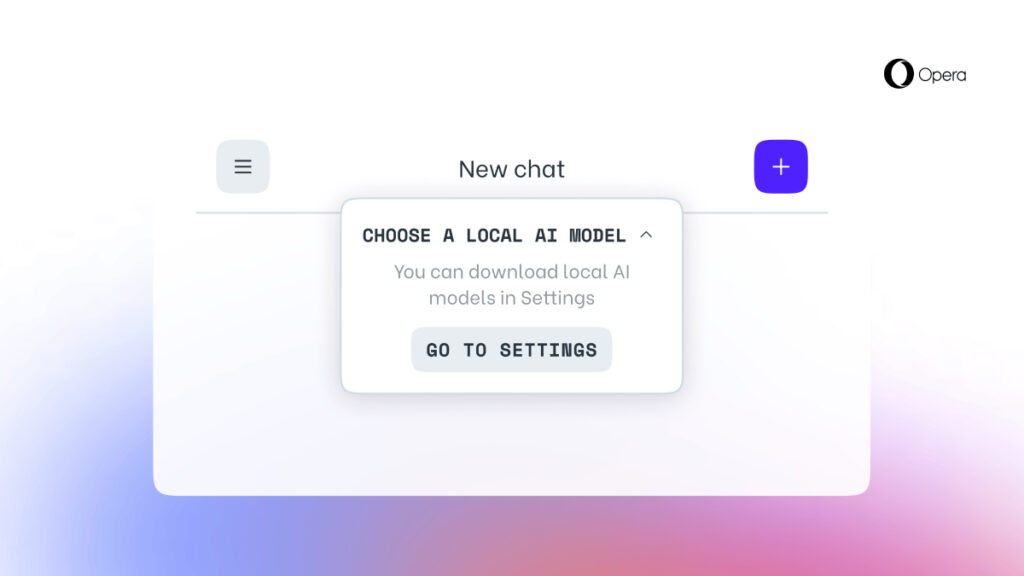

Opera continues to invest heavily in artificial intelligence. Today, the company introduced another feature that is not available in other browsers: the ability to access local AI models.

Opera has added experimental support for 150 local LLM (large language model) variants from 50 different model families, allowing users to access and manage local LLMs directly from their browser.

How Will Artificial Intelligence Models Be Accessed?

According to Opera, the local models are offered as a complement to Opera's online Aria AI service, which is also available in the Opera browser on iOS and Android. Supported local LLMs include Llama (Meta), Vicuna, Gemma (Google), and Mixtral (Mistral AI).

The new feature is designed to keep browsing as private as possible. Because the LLMs run locally, user data stays on the user's device, so people can use artificial intelligence without sending their information to a server.

Starting today, the new feature is available to Opera One Developer users, who need to update Opera Developer to the latest version to enable it.

Once a user selects a specific LLM, the model is downloaded to their device. Each local LLM variant typically requires 2-10 GB of storage space. After the download, the selected local LLM is used instead of Aria, Opera's built-in browser AI.